09. Map to World Coordinates

Work through the steps below and complete the Map to World coordinates quiz!

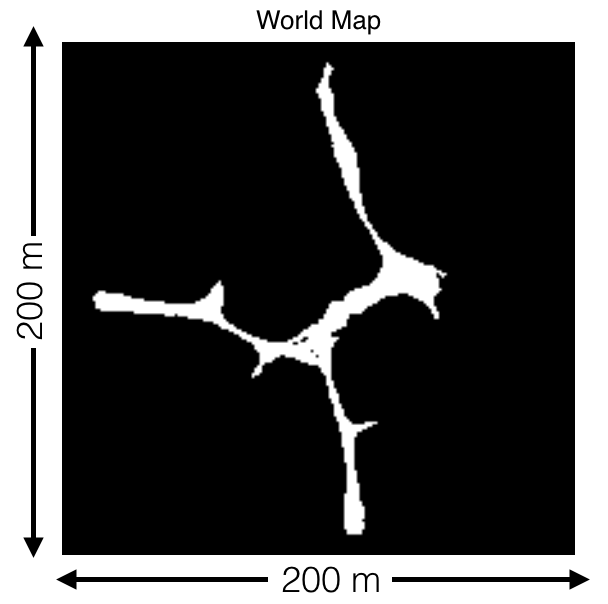

The environment you will be navigating with the rover in this project is roughly 200 x 200 meters and looks like the image above from a top-down view. The white areas represent the navigable terrain. You will be provided a copy of this map with the project at a resolution of 1 square meter per pixel (same as shown above). Your goal in this exercise is to write code that will allow you to use the rover's position, orientation and camera image to map its environment and compare against this ground truth map!

Rotation and Translation

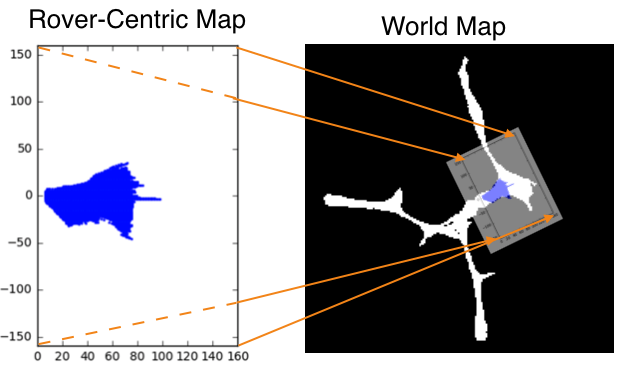

Now that you have generated a map of navigable terrain in rover-centric coordinates, the next step is to map those points to world coordinates. To do this, you'll use a rotation followed by a translation. The rotation is required to account for the fact that when the camera takes a picture, the rover can be pointing in any arbitrary direction, given by its yaw angle. The translation is to account for the fact that the rover may be located at any position in the world when it takes a picture. So, it's a two-step process:

- Rotate the rover-centric coordinates so that the x and y axes are parallel to the axes in world space.

- Translate the rotated positions by the x and y position values given by the rover's location (position vector) in the world.

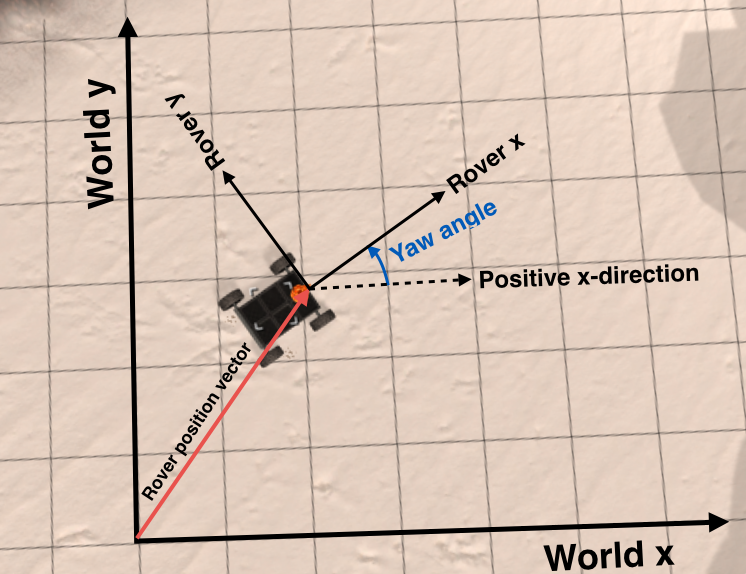

Let's start with the rotation. The yaw angle is measured counterclockwise from the positive x-direction like this:

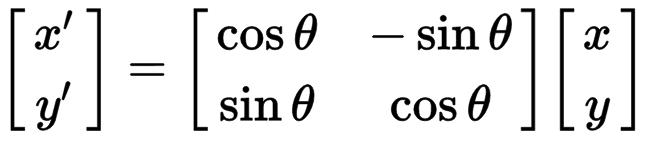

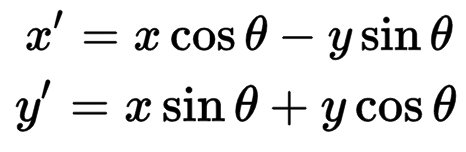

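The first task is to perform a rotation. You'll accomplish this by applying a rotation matrix to your rover space pixel values (let's call them xpix and ypix). For a rotation through an angle $\theta$, the operation looks like this:

$$
\begin{bmatrix} x_{\text{rotated}} \\ y_{\text{rotated}} \end{bmatrix}
=
\begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}
\begin{bmatrix} x_{\text{pix}} \\ y_{\text{pix}} \end{bmatrix}
$$

Or written out explicitly it looks like this:

$$
x_{\text{rotated}} = x_{\text{pix}} \cos\theta - y_{\text{pix}} \sin\theta
$$

$$
y_{\text{rotated}} = x_{\text{pix}} \sin\theta + y_{\text{pix}} \cos\theta
$$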

Or in code, you could write it up like this (passing in xpix and ypix as our original pixel positions and yaw as our yaw angle):

import numpy as np

# yaw angle is recorded in degrees so first convert to radians

yaw_rad = yaw * np.pi / 180

x_rotated = xpix * np.cos(yaw_rad) - ypix * np.sin(yaw_rad)

y_rotated = xpix * np.sin(yaw_rad) + ypix * np.cos(yaw_rad)

The next step in mapping to world coordinates is to perform a translation by simply adding the x and y components of the rover's position to the x_rotated and y_rotated values calculated above.
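As a minimal sketch of rotation followed by translation, the snippet below places a single rover-space point in the world. All of the numbers are hypothetical illustration values, and it assumes for the moment that rover space and world space use the same units, so no scaling is applied.

```python
import numpy as np

# Hypothetical values: one point 5 units straight ahead of the rover,
# with the rover at (100, 50) and a yaw of 90 degrees (facing +y).
xpix = np.array([5.0])
ypix = np.array([0.0])
yaw = 90.0
xpos, ypos = 100.0, 50.0

# Rotation: convert yaw to radians, then apply the rotation matrix
yaw_rad = np.deg2rad(yaw)
x_rotated = xpix * np.cos(yaw_rad) - ypix * np.sin(yaw_rad)
y_rotated = xpix * np.sin(yaw_rad) + ypix * np.cos(yaw_rad)

# Translation only (assumes rover and world space share the same units)
x_translated = xpos + x_rotated
y_translated = ypos + y_rotated

print(np.round(x_translated, 6), np.round(y_translated, 6))
```

With the rover facing the positive y-direction, a point 5 units ahead of it should land at approximately (100, 55) in the world, up to floating point error.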

Keep in mind, however, the scale associated with pixels in rover space versus world space. If, for example, as suggested in the previous exercise, you have mapped pixels in rover space such that each represents 0.1 x 0.1 m, and in your world map (as will be the case in the project) each pixel is 1 x 1 m, then you need to divide your rover space pixel values by 10 before mapping to world space. In that case, assuming the x and y position of the rover are given as xpos and ypos:

# Assume a scale factor of 10 between world space pixels and rover space pixels

scale = 10

# Perform translation and convert to integer since pixel values can't be float

x_world = np.int_(xpos + (x_rotated / scale))

y_world = np.int_(ypos + (y_rotated / scale))

Ultimately you would like to add these new pixels to your map. If the rover was near the edge of the world, however, some of the values you generated may fall outside the map, so you should also clip them to the allowable range given by the map size using the np.clip() function.

# Assume a mapsize of 200 x 200 pixels (for our 200 x 200 m world)

world_size = 200

x_pix_world = np.clip(x_world, 0, world_size - 1)

y_pix_world = np.clip(y_world, 0, world_size - 1)

Alright, so let's put it all together! Your goal in this next exercise is to write two functions, one to apply a rotation and one to perform a scaling and translation. I'll provide you with a third function that applies these two in succession and then does the clipping step. So it'll look like this:

# Define a function to apply a rotation to pixel positions

def rotate_pix(xpix, ypix, yaw):

    # TODO:

    # Convert yaw to radians

    # Apply a rotation

    # Return the result

# Define a function to perform a translation

def translate_pix(xpix_rot, ypix_rot, xpos, ypos, scale):

    # TODO:

    # Apply a scaling and a translation

    # Return the result

Note: in the exercise below, we're choosing random numbers for yaw and position, such that running the code multiple times will produce different outputs each time.
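To make the overall flow concrete, here is one possible sketch of how the pieces could fit together, built from the snippets shown earlier on this page. The bodies of rotate_pix and translate_pix are just one way to fill in the TODOs. The name pix_to_world, its argument order, the example inputs, and the worldmap[y, x] indexing convention are assumptions for illustration, not necessarily what the project code uses.

```python
import numpy as np

def rotate_pix(xpix, ypix, yaw):
    # Assumes yaw is given in degrees; convert to radians first
    yaw_rad = np.deg2rad(yaw)
    xpix_rot = xpix * np.cos(yaw_rad) - ypix * np.sin(yaw_rad)
    ypix_rot = xpix * np.sin(yaw_rad) + ypix * np.cos(yaw_rad)
    return xpix_rot, ypix_rot

def translate_pix(xpix_rot, ypix_rot, xpos, ypos, scale):
    # Scale rover-space pixels down to world-space pixels, then shift
    xpix_tran = xpos + xpix_rot / scale
    ypix_tran = ypos + ypix_rot / scale
    return xpix_tran, ypix_tran

def pix_to_world(xpix, ypix, xpos, ypos, yaw, world_size, scale):
    # Hypothetical wrapper: rotate, then scale and translate, then clip
    xpix_rot, ypix_rot = rotate_pix(xpix, ypix, yaw)
    xpix_tran, ypix_tran = translate_pix(xpix_rot, ypix_rot, xpos, ypos, scale)
    x_pix_world = np.clip(np.int_(xpix_tran), 0, world_size - 1)
    y_pix_world = np.clip(np.int_(ypix_tran), 0, world_size - 1)
    return x_pix_world, y_pix_world

# Hypothetical inputs: four rover-space points along the rover's x-axis,
# the last one deliberately far enough away to fall off the map
xpix = np.array([0.0, 10.0, 20.0, 5000.0])
ypix = np.array([0.0, 0.0, 0.0, 0.0])
x_w, y_w = pix_to_world(xpix, ypix, xpos=100.0, ypos=50.0, yaw=0.0,
                        world_size=200, scale=10)

# Mark the mapped pixels on an empty world map (row = y, column = x is
# an assumed convention)
worldmap = np.zeros((200, 200))
worldmap[y_w, x_w] += 1
print(x_w, y_w)
```

In this sketch the out-of-range point is pulled back to the last valid index (199) by np.clip() rather than being discarded, so it still shows up on the map at the edge.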
